Backing up Slack on Iceberg with dlt & Dagster

A sweet combo that could use some polish

Recently, I’ve been considering how to save conversations from various Slack workspaces that aren’t on a paid plan. With the free plan limiting message history to 90 days, it’s frustrating to lose valuable discussions. While trying to revisit past conversations, I realized I needed a reliable way to preserve this history.

Naturally, I wondered: hasn’t someone solved this problem already? The answer is yes! There are plenty of tools and scripts available on GitHub, such as slackdump or slack-backup.

However, these projects come with a significant challenge: keeping backups up-to-date. Do you set a reminder every 90 days to rerun the script? If you back up earlier, how do you handle duplicate data? And what happens if Slack's API goes down for a day or two during your backup window?

Why dlt & Dagster

I’d been exploring dlt for a while, waiting for the right opportunity to try it out. When I found its Slack integration, I immediately thought, “This could be the solution I need!” Further searching led me to the dlt-slack-backup project, which seemed to check all the boxes.

That said, a few caveats came to light:

Bot Permissions: The dlt implementation relies on a Slack App. The “bot” must join every channel you want to back up. This requires a custom script to automatically add the bot to all relevant channels.

Inefficient Data Retrieval: The backup job fetches all messages from the start of time (2000-01-01) up to the present. While this isn’t a huge issue for small workspaces or the 90-day history limit, it feels inefficient for larger teams.

GitHub Actions for Scheduling: While GitHub Actions is excellent for CI/CD workflows, it lacks features that modern workflow schedulers offer, such as observability, error handling, and dynamic scheduling. Additionally, partitioned job management—a must-have for efficient backups—can be handled seamlessly with Dagster, which lets you schedule backups for specific days.

Output to Google BigQuery: BigQuery is a convenient storage solution—it’s easy to set up and use. However, relying on it means stepping out of the open-source ecosystem. If you already use BigQuery, it’s a no-brainer, but for others, this could be a limitation.
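To make the "one backup per day" idea concrete, here is a minimal sketch of how a daily partition can be turned into a time window. It assumes Dagster's `DailyPartitionsDefinition`, whose partition keys look like `2024-11-01`; the `fetch_messages(start, end)` callable, the asset name, and the start date are hypothetical placeholders, not code from the project.

```python
from datetime import datetime, timedelta, timezone


def day_window(partition_key: str) -> tuple[datetime, datetime]:
    """Return the [start, end) UTC window for a 'YYYY-MM-DD' partition key."""
    start = datetime.strptime(partition_key, "%Y-%m-%d").replace(tzinfo=timezone.utc)
    return start, start + timedelta(days=1)


def build_daily_backup_asset(fetch_messages, first_day: str = "2024-01-01"):
    """Wrap a hypothetical fetch_messages(start, end) callable in a daily-partitioned asset."""
    # Imported lazily so day_window stays usable without Dagster installed.
    from dagster import DailyPartitionsDefinition, asset

    @asset(partitions_def=DailyPartitionsDefinition(start_date=first_day))
    def slack_messages(context):
        # Dagster passes the day being materialized as a 'YYYY-MM-DD' key.
        start, end = day_window(context.partition_key)
        context.log.info(f"Backing up Slack messages from {start} to {end}")
        fetch_messages(start, end)

    return slack_messages
```

With this shape, a failed day can be rerun on its own, and a Slack outage only means backfilling the missing partitions rather than refetching everything since 2000-01-01.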

Iceberg ?!

Iceberg has emerged as the frontrunner in the open table format wars, boasting the most integrations across the industry. This made it my top choice for reading and writing data. However, there’s one limitation: since dlt doesn’t support an Iceberg catalog, tables can only be located through their metadata files. While this isn’t a dealbreaker, it can be cumbersome.

For instance, in my pyiceberg script, registering tables from metadata file paths is still a manual process. My current approach—selecting the latest metadata file based on the alphanumerical order of file names—might not even be correct. Beyond this inconvenience, Iceberg shines as a format. I was able to read tables using DuckDB, although this also required knowledge of the latest full metadata file path, which isn’t the most user-friendly.
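Plain alphabetical sorting can indeed pick the wrong file: with names such as `v2.metadata.json` and `v10.metadata.json`, `v2` sorts last. A safer sketch is to parse the version number out of the file name. It assumes the two common Iceberg naming schemes (`v<N>.metadata.json` and `<N>-<uuid>.metadata.json`); the function names are my own.

```python
import re
from pathlib import Path

# Matches "v12.metadata.json" and "00012-<uuid>.metadata.json".
_VERSION = re.compile(r"^v?(\d+)(?:-.+)?\.metadata\.json$")


def metadata_version(path: Path) -> int:
    """Extract the numeric version from an Iceberg metadata file name."""
    match = _VERSION.match(path.name)
    if match is None:
        raise ValueError(f"Unrecognized metadata file name: {path.name}")
    return int(match.group(1))


def latest_metadata_file(metadata_dir) -> Path:
    """Return the metadata file with the highest version number."""
    candidates = list(Path(metadata_dir).glob("*.metadata.json"))
    if not candidates:
        raise FileNotFoundError(f"No metadata files in {metadata_dir}")
    # Compare versions as integers, not as strings.
    return max(candidates, key=metadata_version)
```

The resulting path is what a reader then needs, for example pyiceberg's `StaticTable.from_metadata(...)` or DuckDB's `iceberg_scan(...)`.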

The project

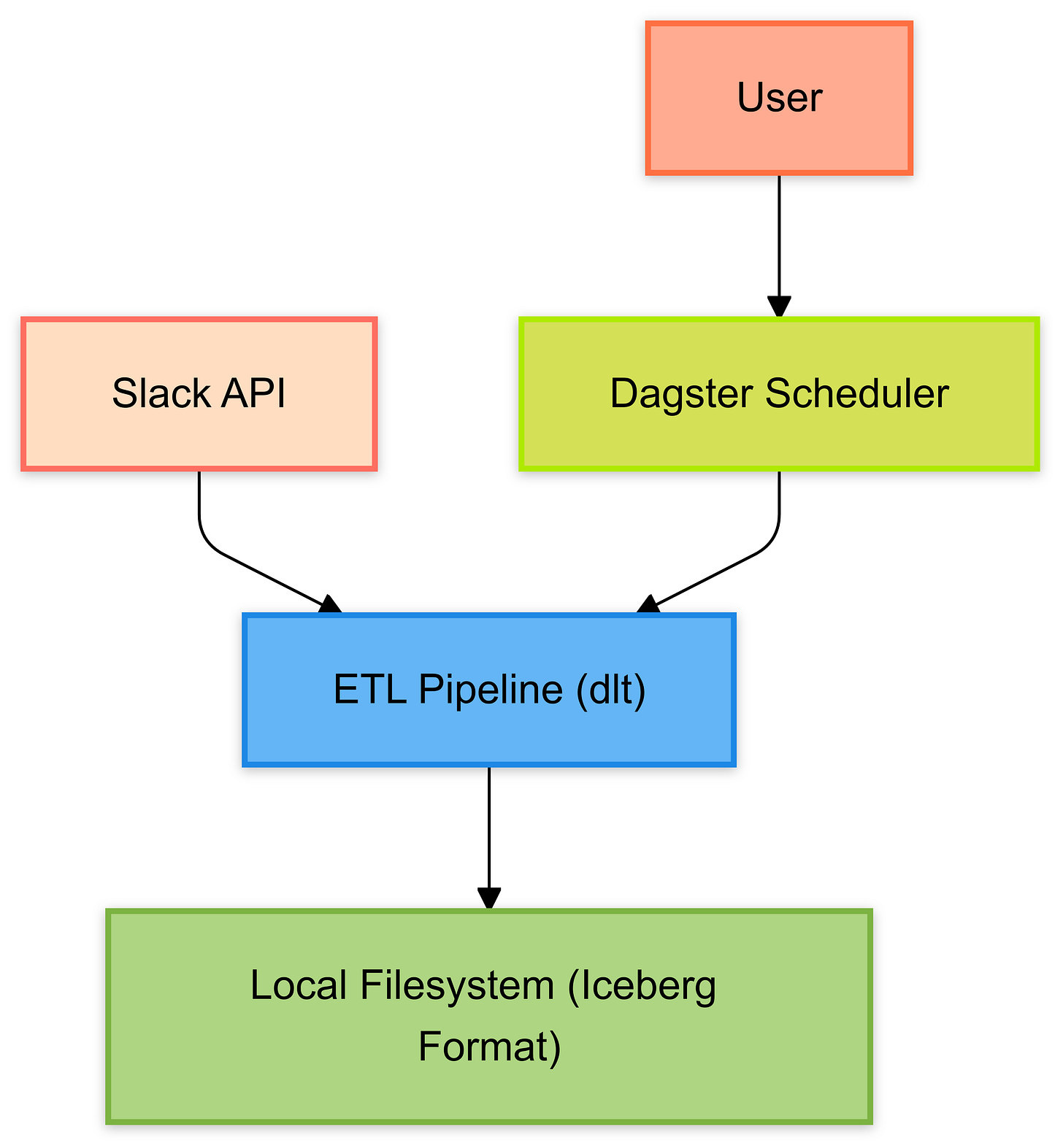

Here’s a high-level architecture diagram of the project I built:

The project source code is available on GitHub at https://github.com/Kayrnt/dlt-iceberg-slack-backup.

The project structure is straightforward:

.dlt: Contains the configuration and secrets.

data: The root directory for Iceberg storage.

slack: The dlt Slack integration, created via the dlt CLI.

slack_backup: Dagster code for the pipeline.

slack_backup_tests: Intended for Dagster pipeline tests, though skipped for this proof of concept (🙈).

.env: Configuration for Dagster.

browse_messages.py: A Python script demonstrating how to consume the output in Iceberg format.

Learnings & feedback

Although I had read about both dlt and Dagster before, I’d never used them. I found both surprisingly easy to get started with—just like the Slack API.

Slack API

The Slack API is relatively straightforward, especially with Python, thanks to its SDK. However, it has some frustrating limitations. For instance, you can’t operate “as yourself” without creating an app and obtaining a personal token. This process requires workspace administrators to install the app, adding an extra layer of friction. It’s not surprising that tools like slackdump rely on Slack client authentication instead. While I understand that Slack’s approach is designed to prevent unrestricted access to messages, users, or channels, it still feels limiting for personal use cases.

dlt

Bootstrapping the dlt project was faster than I expected. The example pipeline aligned closely with what I needed, and the documentation was clear. I made some minor tweaks, such as adding logic to join all public channels for data retrieval.
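The channel-joining tweak can be sketched roughly as follows. This is not the exact code from the repository: it assumes a `slack_sdk` `WebClient` (or anything exposing the same `conversations_list` and `conversations_join` methods) and a bot token with the `channels:read` and `channels:join` scopes. The `SLACK_BOT_TOKEN` variable name is a placeholder.

```python
def join_public_channels(client) -> list[str]:
    """Join every public, non-archived channel the bot is not yet a member of."""
    joined = []
    cursor = None
    while True:
        page = client.conversations_list(
            types="public_channel", exclude_archived=True, limit=200, cursor=cursor
        )
        for channel in page["channels"]:
            if not channel.get("is_member"):
                client.conversations_join(channel=channel["id"])
                joined.append(channel["name"])
        # An empty or missing cursor means this was the last page.
        cursor = page.get("response_metadata", {}).get("next_cursor")
        if not cursor:
            return joined


def main():
    # Imported lazily; requires the slack_sdk package and a real bot token.
    import os
    from slack_sdk import WebClient

    client = WebClient(token=os.environ["SLACK_BOT_TOKEN"])  # placeholder variable name
    print(join_public_channels(client))
```

Running this before each backup keeps newly created channels covered. Private channels still need a manual invite.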

The only area where I faced challenges was with the destination setup. By default, dlt uses DuckDB, which is a database engine. However, for writing to formats like Delta or Iceberg, you’re dealing with table formats (`table_format` as a pipeline option) rather than engines. While this distinction makes sense—destinations should match the data sink (e.g., AWS S3, GCS, local filesystem, or Snowflake for Iceberg)—it initially confused me.
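In code, the split looks roughly like this: the destination says where the files land, and `table_format` says how they are laid out. This is a sketch under assumptions, not the project's actual pipeline. It assumes a dlt version whose filesystem destination supports Iceberg (with pyiceberg installed); the pipeline name, dataset name, and `data` directory are illustrative.

```python
from pathlib import Path


def local_bucket_url(directory: str) -> str:
    """Turn a local directory into a file:// URL for the filesystem destination."""
    return Path(directory).resolve().as_uri()


def run_iceberg_backup(data, directory: str = "data"):
    """Load `data` (a dlt source or resource) as Iceberg tables on the local filesystem."""
    # Imported lazily; assumes dlt is installed with Iceberg support.
    import dlt

    pipeline = dlt.pipeline(
        pipeline_name="slack_backup",  # illustrative name
        destination=dlt.destinations.filesystem(bucket_url=local_bucket_url(directory)),
        dataset_name="slack",  # illustrative name
    )
    # The destination is the sink; the table format is chosen per run.
    return pipeline.run(data, table_format="iceberg")
```

Swapping the local directory for an S3 or GCS bucket URL should only change the destination, not the table format.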

Dagster

When it came to scheduling, I wanted to explore Dagster because I’m a fan of its asset-based approach, which contrasts with the task-based standard of most workflow schedulers. The developer experience was excellent—Dagster can be installed locally via Python package managers, and its UX is intuitive. I plan to explore it further and compare it to Airflow when I get the chance.

Going Further

I originally turned to dlt for a Slack connector to save time, which it delivered. I also considered using SQLMesh on top of it, as it integrates with dlt. However, SQLMesh supports dlt resources, not sources, so it didn’t fully meet my needs. Since my focus wasn’t on running downstream models on Slack backup data, this wasn’t a priority.

Due to DuckDB's limited support for Iceberg, I’d likely need to fall back on PySpark for reading and writing datasets—less efficient but more flexible.

I’m curious to dive deeper into dbt and SQLMesh integrations with Dagster. Comparing them could be an interesting avenue for future exploration posts.

Since this pipeline leverages Apache Iceberg, having a proper catalog to explore tables would be a game-changer. As I’m using a local stack, managed services are off the table. However, Apache Polaris might be a good fit: it supports remote filesystems like S3 as well as local filesystems, and because it implements the Iceberg REST API, it allows table registration.

That said, Polaris doesn’t currently offer a command or API to fully scan a root directory of an Iceberg data lake. Writing a small script to handle this isn’t difficult, but I hope future versions introduce “discovery” features to simplify this process.
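Such a discovery script could look like the sketch below. It assumes a `<root>/<dataset>/<table>/metadata/*.metadata.json` layout, which is how I understand dlt writes its Iceberg tables, and it relies on pyiceberg's `Catalog.register_table`. The function names are my own, and the target namespaces are assumed to already exist in the catalog.

```python
import re
from pathlib import Path


def _version(path: Path) -> int:
    """Numeric version prefix of a metadata file name, or -1 if there is none."""
    match = re.match(r"v?(\d+)", path.name)
    return int(match.group(1)) if match else -1


def discover_tables(root) -> dict[tuple[str, ...], Path]:
    """Map each table identifier to its newest metadata file under root."""
    tables = {}
    for metadata_dir in Path(root).rglob("metadata"):
        if not metadata_dir.is_dir():
            continue
        files = list(metadata_dir.glob("*.metadata.json"))
        # Identifier = path of the table directory relative to the root.
        identifier = metadata_dir.parent.relative_to(root).parts
        if files and identifier:
            tables[identifier] = max(files, key=_version)
    return tables


def register_all(catalog, root) -> None:
    """Register every discovered table in a pyiceberg catalog, e.g. a REST catalog."""
    for identifier, metadata_file in discover_tables(root).items():
        catalog.register_table(identifier, metadata_file.resolve().as_uri())
```

The catalog server must be able to read the registered locations, so local `file://` paths only work if the catalog runs on the same machine.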

Do you currently export or back up Slack data? If so, how do you approach it? If not, does the method I’ve shared here align with your needs? I’m curious to hear how others handle this process and how they “productionize” it to ensure it’s both scalable and easy to maintain. Feel free to share your thoughts in the comments!