I recently had the opportunity to travel to San Francisco to attend the GROUP BY conference organized by Tobiko Data. It was an exceptional chance to meet the team and share ideas with them. I'm hopeful that some of these discussions will materialize in one form or another!

The big day

The conference took place at the SVB Center, featuring a packed single-track schedule from 9 AM to 4 PM.

Tobiko's Roadmap

The founders (Tyson Mao, Toby Mao, and Iaroslav Zeigerman) kicked off the sessions with a presentation about the roadmap and Tobiko Cloud milestones. Here are the new features that I believe will differentiate the cloud version from the OSS version and potentially impact the industry:

Privacy regulation (GDPR, CCPA) assistance

Though privacy hasn't been a major focus in the industry over the past decade, tasks such as on-demand data retrieval or deletion are often challenging to implement correctly. I've personally worked on these topics, and they're frequently deprioritized because most people acknowledge that working on them is neither enjoyable nor rewarding, as they don't directly add business value (aside from avoiding huge fines or lawsuits).

Leveraging their position as a transformation layer to identify and offer easy ways to comply with regulations seems like a smart approach that could save substantial work for enterprise environments. This would allow teams to shift their focus toward building features that provide direct business value.

AI-assisted migration to SQLMesh/Tobiko Cloud

I have mixed feelings about this feature. I believe it would make sense for projects using custom frameworks, but for transformations already implemented in dbt, I would assume that SQLMesh's dbt project compatibility would be more reliable for converting existing models/tests to the SQLMesh format.

At the same time, I suspect that an AI properly trained with up-to-date documentation might be more creative than a standard converter, enabling conversions that better align with the developer's intent. My concern is that it could result in truncated SQL, unexpected modifications, or completely hallucinated models. I trust the team will implement the right safeguards to avoid these pitfalls.

Data warehouse cost monitoring

This is probably the feature that resonates most with my experience, as I maintain a dbt package for this exact purpose! Tracking cost per model over time and through changes is essential, and I'm glad it's on Tobiko Data's roadmap.
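To make "cost per model" concrete, here is a minimal sketch of the underlying arithmetic: sum the bytes billed by each model's queries and convert the total to a dollar amount. The job records, the field names (`model`, `bytes_billed`) and the price per TiB are hypothetical placeholders, not taken from Tobiko Cloud or from any specific warehouse contract.

```python
from collections import defaultdict

# Hypothetical on-demand price in USD per TiB scanned; use your own rate.
PRICE_PER_TIB_USD = 6.25
BYTES_PER_TIB = 1024 ** 4


def cost_per_model(jobs, price_per_tib=PRICE_PER_TIB_USD):
    """Sum bytes billed per model and convert each total to USD."""
    billed = defaultdict(int)
    for job in jobs:
        billed[job["model"]] += job["bytes_billed"]
    return {
        model: total / BYTES_PER_TIB * price_per_tib
        for model, total in billed.items()
    }


# Hypothetical job log: one entry per query run, tagged with the model it built.
jobs = [
    {"model": "orders", "bytes_billed": 2 * BYTES_PER_TIB},
    {"model": "orders", "bytes_billed": 1 * BYTES_PER_TIB},
    {"model": "customers", "bytes_billed": BYTES_PER_TIB // 2},
]

costs = cost_per_model(jobs)
# Print the most expensive models first.
for model, usd in sorted(costs.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{model}: ${usd:.2f}")
```

The hard part in practice is not this sum but reliably attributing each warehouse job to a model and a version, which is exactly where a transformation layer has an advantage.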

First-class integration of SQLMesh with Airflow and Dagster

While it's easy to get started and schedule a project with a simple cron job, it's not the most efficient approach when projects scale. I've personally worked on integrating my company's scheduler system with our dbt setup, so I can attest that providing proper integration can be challenging. This includes managing concurrency, implementing caching for fast startup, creating deployment and rollback systems, and ensuring resilience to errors.

Having the team focus on clean integration with industry-leading orchestration tools is great! I also appreciate their understanding that many teams use existing schedulers to run jobs outside of SQLMesh, rather than forcing users to adopt a proprietary scheduler within Tobiko Cloud.

Single-Pane-of-Glass Troubleshooting UI

Troubleshooting is rarely the most enjoyable activity for developers. It often involves frustrated stakeholders and potential delays to other priorities. I've personally added BigQuery console logs to dbt debug logs for this exact purpose, as it helps understand why a previously working query might be taking too long or failing outright.

While this approach is helpful, it becomes very noisy when executing dozens of concurrent jobs. Having a proper UI in SQLMesh that easily pinpoints logs to each model and version will be a significant improvement. Additionally, related metadata like model duration or input previews could make troubleshooting much simpler.

Data warehouse migration

I appreciated the presentation by Justin Kolpak and Afzal Jasani about migrating projects across data warehouses (especially Databricks). As I've noted in previous articles, SQL transpilation is incredibly powerful for experimenting with alternatives or executing migrations. Of course, this requires proper testing and table diff capabilities (which SQLMesh provides), but it will make a significant difference when pushing these initiatives into team roadmaps and completing them on time without regressions.
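To illustrate what a table diff checks during a migration, here is a minimal sketch using Python's built-in `sqlite3` module: it lists the rows present in one table but not in the other. This shows the general idea only, not SQLMesh's own table diff, and the table names and data are hypothetical.

```python
import sqlite3


def table_diff(conn, source, target):
    """Return rows found only in `source` and rows found only in `target`.

    Table names are interpolated directly, so only pass trusted identifiers.
    """
    only_source = conn.execute(
        f"SELECT * FROM {source} EXCEPT SELECT * FROM {target}"
    ).fetchall()
    only_target = conn.execute(
        f"SELECT * FROM {target} EXCEPT SELECT * FROM {source}"
    ).fetchall()
    return sorted(only_source), sorted(only_target)


conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE legacy_orders (id INTEGER, amount REAL);
    CREATE TABLE migrated_orders (id INTEGER, amount REAL);
    INSERT INTO legacy_orders VALUES (1, 10.0), (2, 20.0), (3, 30.0);
    INSERT INTO migrated_orders VALUES (1, 10.0), (2, 25.0), (3, 30.0);
    """
)

missing, unexpected = table_diff(conn, "legacy_orders", "migrated_orders")
print("only in legacy:", missing)
print("only in migrated:", unexpected)
```

Note that `EXCEPT` deduplicates rows, so a real comparison would also check row counts and usually join on a key to report which columns differ.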

SQLGlot

George Sittas also delivered a dedicated session on SQLGlot, which continues to add support for new dialects, such as Druid earlier this year. The audience particularly appreciated his commitment to addressing feature requests within 5 minutes of submission! 😁

Customer feedback

Joseph Lane's talk on Avios's experience was particularly interesting, as it echoed my own experience that business stakeholders don't care about technical details—they just want pipelines to work. Yet having a solid platform for these pipelines to run efficiently and reliably is essential. There's nothing more frustrating for leadership and data teams than dealing with poor data quality that leads to incorrect decisions. The frustration compounds when these issues take days or even weeks to resolve, making it difficult to justify the costs incurred by data teams and infrastructure.

SQLMesh doesn't directly fix these problems, but it enables data teams to implement best practices (like unit testing) and iterate quickly (with local validation).

Kariba Labs' presentation was fascinating, as it addressed a topic I had discussed a few weeks ago in the Tobiko Data Slack: is it possible to leverage the recent blueprinting feature to dynamically create models? The answer was... mostly no, it's not recommended. From what I understood, Kariba Labs took a different approach by setting up a "model factory" to create dozens of models from a single template!

This approach isn't entirely novel—I've seen teams do similar things with dbt—but those implementations typically require external scripts to generate model files, which is quite clunky. Seeing this capability nicely integrated within SQLMesh, thanks to its proper Python support, is definitely refreshing!
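To give a feel for the "model factory" idea, here is a minimal sketch in plain Python that renders several model definitions from one template. It is a generic illustration only: it uses neither the SQLMesh Python API nor Kariba Labs' actual implementation, and the template, schema names and event types are hypothetical.

```python
from string import Template

# Hypothetical template: one aggregation model per event type.
MODEL_TEMPLATE = Template(
    "SELECT user_id, COUNT(*) AS ${event}_count\n"
    "FROM raw.events\n"
    "WHERE event_type = '${event}'\n"
    "GROUP BY user_id"
)


def model_factory(events):
    """Return a mapping of model name -> rendered SQL, one per event type."""
    return {
        f"analytics.{event}_per_user": MODEL_TEMPLATE.substitute(event=event)
        for event in events
    }


models = model_factory(["click", "purchase", "signup"])
for name, sql in models.items():
    print(f"-- {name}\n{sql}\n")
```

The appeal of doing this inside the transformation tool is that the generated models never exist as files on disk, so nothing drifts out of sync with the template.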

Partners

A key growth area for SQLMesh is integrations. This is an area where dbt excels, particularly with partnerships. Numerous companies support dbt as part of their data catalog or through dedicated dbt packages. It's encouraging to see companies working to integrate SQLMesh as a supported platform.

If you follow SYNQ or Tobiko Data, you might have already read about their partnership in a blog post. I hadn't deeply explored the details previously, so it was interesting to see how the platform leverages metadata to connect transformations to higher-level "data products" that help developers track the health of underlying pipelines. I've personally used Elementary Data with our dbt stack and appreciate the data observability these tools provide.

Foundational's talk highlighted their support for SQLMesh integration in their lineage compiler. This is a product category I hadn't previously encountered or considered, but it makes perfect sense to have impact analysis and related communication when changes occur to models. For instance, if a team responsible for creating tracking events changes a schema or column format, downstream teams working on customer-facing reporting should be notified of potential impacts. While strict data contracts might seem sufficient, maintaining rigidity over longer periods is challenging. Communication matters, and Foundational's products can help autonomous teams work more efficiently together over time.
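At its core, impact analysis is a graph traversal: given a changed model, find everything downstream of it. The sketch below is a minimal illustration with a hypothetical lineage graph and made-up model names. It says nothing about how Foundational's lineage compiler actually works.

```python
from collections import deque

# Hypothetical lineage: each key feeds the models listed in its value.
LINEAGE = {
    "tracking_events": ["sessions", "conversions"],
    "sessions": ["customer_report"],
    "conversions": ["customer_report", "finance_dashboard"],
    "customer_report": [],
    "finance_dashboard": [],
}


def downstream_of(changed, lineage):
    """Breadth-first walk returning every model reachable from `changed`."""
    seen = set()
    queue = deque([changed])
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return sorted(seen)


impacted = downstream_of("tracking_events", LINEAGE)
print(impacted)
```

In this toy graph, a schema change on `tracking_events` reaches both the customer-facing report and the finance dashboard, so the owners of both would need a heads-up.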

I expect we'll see more companies partnering with Tobiko Data in the future for mutually beneficial relationships. Interoperability has been a major challenge in the modern data stack, and the "winners" will definitely be those that can integrate effectively with the rest of the ecosystem.

Community

Andrew Madson closed the conference by highlighting the impressive growth of the community across different channels. Of course, some platforms started with small numbers, making it easier to achieve two- or three-digit percentage growth. Nevertheless, it clearly indicates that SQLMesh is gaining traction.

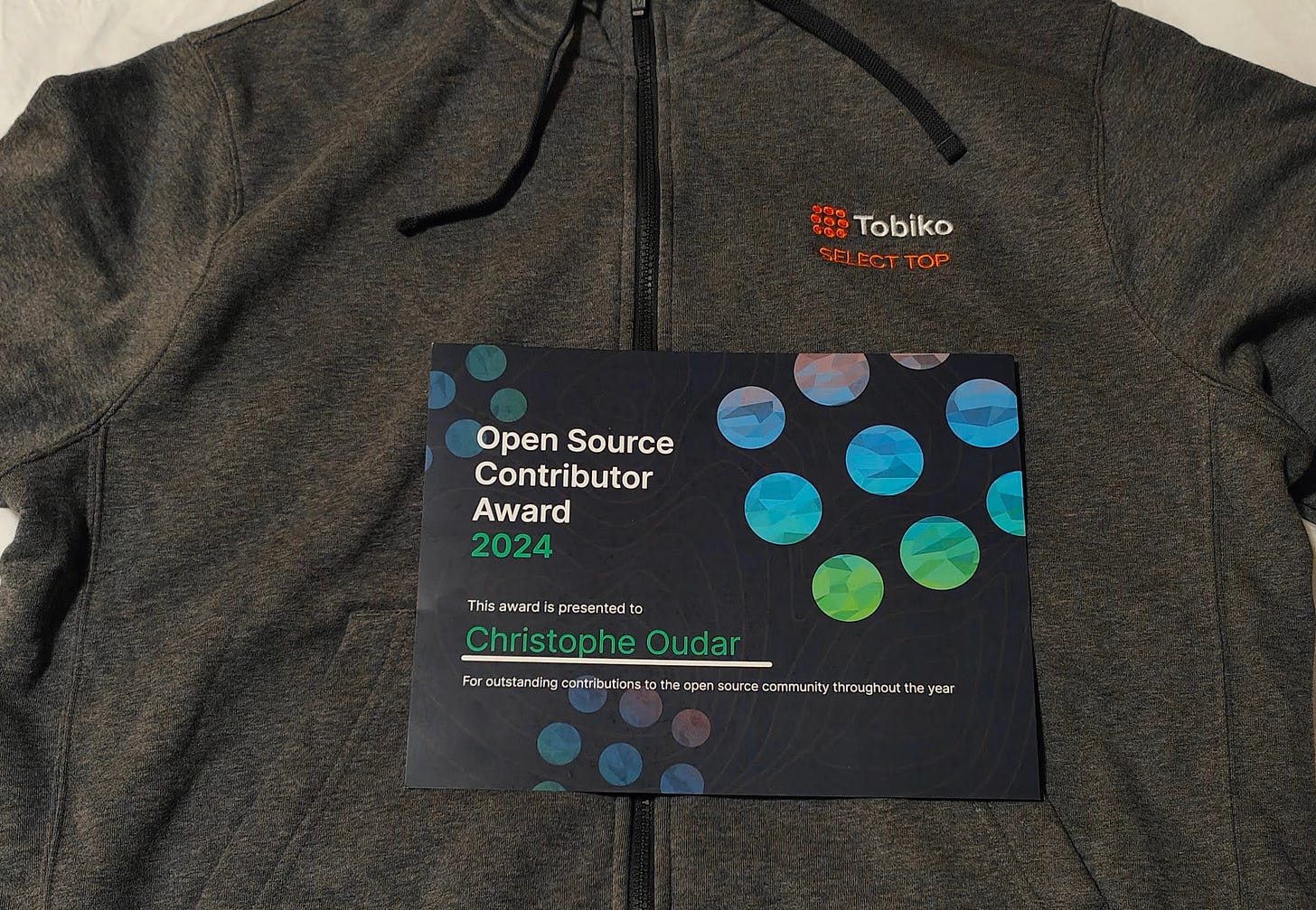

The team revealed the five contributors nominated for the 2024 community awards, which I was delighted to be part of! I was a bit disappointed to be the only nominee who made it to San Francisco this time, but I'm sure there will be future opportunities to meet.

I'm not certain I'll make the 2025 shortlist, but I have several potential contributions in mind that would be valuable if I were working with SQLMesh in production.

What else?

During the conference, I found time to discuss ideas with the team, which I'd like to share (even though they may or may not materialize):

SQLMesh isn't known for its simplicity, at least in terms of user perception during onboarding. I discussed with Andrew how he might help make SQLMesh more accessible. I'm excited to see how he develops these ideas over the coming months!

SQLMesh's partition awareness is one of its main differentiators from dbt's statelessness. I chatted with Chris about making operations on the state more user-friendly, and suggested some options based on Job history, our internal tool at Teads. I hope the team can create a first-class platform that improves the developer experience of operating backfills across complex data pipelines.
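To show why keeping state matters for backfills, here is a minimal sketch that compares the days already processed for a model with the requested date range and returns the gaps. The state representation (a plain set of dates) and the function name are hypothetical and much simpler than what SQLMesh actually stores.

```python
from datetime import date, timedelta


def missing_days(start, end, processed):
    """List the days in [start, end] that are absent from `processed`."""
    total_days = (end - start).days + 1
    candidates = (start + timedelta(days=offset) for offset in range(total_days))
    return [day for day in candidates if day not in processed]


# Hypothetical state: the days a model has already been computed for.
processed = {date(2024, 10, 1), date(2024, 10, 2), date(2024, 10, 4)}
to_backfill = missing_days(date(2024, 10, 1), date(2024, 10, 5), processed)
print([day.isoformat() for day in to_backfill])
```

A stateless tool has no equivalent of `processed`, so the same question has to be answered by querying the target table or by a human keeping track.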

One weakness of SQLMesh is definitely its lack of proper extensibility: if you want to add a feature, you would likely contribute to the main project instead of creating a separate Python package. This is because the tool isn't designed for plug-and-play features or models in the way dbt allows with its package system. I personally believe this will become essential for building a strong community and ecosystem around SQLMesh. It's probably too early to call this a blocker, but I'm sure the team is well aware of this need.

To conclude

If you've made it to the end of this article, you now know what you missed by not attending this year's GROUP BY conference. I'm confident the team valued this effort and enjoys hosting such events, so we'll likely see more conferences from Tobiko Data in the future (perhaps in Europe?). If you're interested in discussing SQLMesh, Tobiko Data's Slack is the perfect place to start.

Special thanks to the entire team who welcomed me to the conference! This includes those I've already mentioned, as well as all the crew members and the event organizers like Florence, Marisa, and Carol. I was happy to exchange a few French words with someone there (👋 Ben).

🎁 If this article was of interest, you might want to have a look at BQ Booster, a platform I’m building to help BigQuery users improve their day-to-day.

I'm also building a dbt package, dbt-bigquery-monitoring, to help track compute and storage costs across your GCP projects and identify your biggest consumers and opportunities for cost reduction. Feel free to give it a go!

I'm working on something exciting: a dbt adapter for Deltastream, a cloud streaming SQL engine. If you're interested in combining the power of dbt (or SQLMesh) with real-time analytics capabilities, I'd love to chat about it.